1 Introduction

This book is about using the power of computers to do things with geographic data. It teaches a range of spatial skills, including: reading, writing and manipulating geographic data; making static and interactive maps; applying geocomputation to solve real-world problems; and modeling geographic phenomena. By demonstrating how various geographic operations can be linked, in reproducible ‘code chunks’ that intersperse the prose, the book also teaches a transparent and thus scientific workflow. Learning how to use the wealth of geospatial tools available from the R command line can be exciting, but creating new ones can be truly liberating. Using the command-line driven approach taught throughout, and programming techniques covered in Chapter ??, can help remove constraints on your creativity imposed by software. After reading the book and completing the exercises, you should therefore feel empowered with a strong understanding of the possibilities opened up by R’s impressive geographic capabilities, new skills to solve real-world problems with geographic data, and the ability to communicate your work with maps and reproducible code.

Over the last few decades free and open source software for geospatial (FOSS4G) has progressed at an astonishing rate. Thanks to organizations such as OSGeo, geographic data analysis is no longer the preserve of those with expensive hardware and software: anyone can now download and run high-performance spatial libraries. Open source Geographic Information Systems (GIS), such as QGIS, have made geographic analysis accessible worldwide. GIS programs tend to emphasize graphical user interfaces (GUIs), with the unintended consequence of discouraging reproducibility (although many can be used from the command line as we’ll see in Chapter ??). R, by contrast, emphasizes the command line interface (CLI). A simplistic comparison between the different approaches is illustrated in Table 1.1.

| Attribute | Desktop GIS (GUI) | R |
|---|---|---|
| Home disciplines | Geography | Computing, Statistics |
| Software focus | Graphical User Interface | Command line |
| Reproducibility | Minimal | Maximal |

This book is motivated by the importance of reproducibility for scientific research (see the note below). It aims to make reproducible geographic data analysis workflows more accessible, and demonstrate the power of open geospatial software available from the command-line. “Interfaces to other software are part of R” (Eddelbuettel and Balamuta 2018). This means that in addition to outstanding ‘in house’ capabilities, R allows access to many other spatial software libraries, explained in Section 1.2 and demonstrated in Chapter ??. Before going into the details of the software, however, it is worth taking a step back and thinking about what we mean by geocomputation.

Reproducibility is a major advantage of command-line interfaces, but what does it mean in practice? We define it as follows: “A process in which the same results can be generated by others using publicly accessible code.”

This may sound simple and easy to achieve (which it is if you carefully maintain your R code in script files), but has profound implications for teaching and the scientific process (Pebesma, Nüst, and Bivand 2012).

1.1 What is geocomputation?

Geocomputation is a young term, dating back to the first conference on the subject in 1996. What distinguished geocomputation from the (at the time) commonly used term ‘quantitative geography’, its early advocates proposed, was its emphasis on “creative and experimental” applications (Longley et al. 1998) and the development of new tools and methods (Openshaw and Abrahart 2000): “GeoComputation is about using the various different types of geodata and about developing relevant geo-tools within the overall context of a ‘scientific’ approach.” This book aims to go beyond teaching methods and code; by the end of it you should be able to use your geocomputational skills to do “practical work that is beneficial or useful” (Openshaw and Abrahart 2000).

Our approach differs from early adopters such as Stan Openshaw, however, in its emphasis on reproducibility and collaboration. At the turn of the 21st century, it was unrealistic to expect readers to be able to reproduce code examples, due to barriers preventing access to the necessary hardware, software and data. Fast-forward two decades and things have progressed rapidly. Anyone with access to a laptop with ~4GB RAM can realistically expect to be able to install and run software for geocomputation on publicly accessible datasets, which are more widely available than ever before (as we will see in Chapter ??). Unlike early works in the field, all the work presented in this book is reproducible using code and example data supplied alongside the book, in R packages such as spData, the installation of which is covered in Chapter 2.

Geocomputation is closely related to other terms including: Geographic Information Science (GIScience); Geomatics; Geoinformatics; Spatial Information Science; Geoinformation Engineering (Longley 2015); and Geographic Data Science (GDS). Each term shares an emphasis on a ‘scientific’ (implying reproducible and falsifiable) approach influenced by GIS, although their origins and main fields of application differ. GDS, for example, emphasizes ‘data science’ skills and large datasets, while Geoinformatics tends to focus on data structures. But the overlaps between the terms are larger than the differences between them and we use geocomputation as a rough synonym encapsulating all of them: they all seek to use geographic data for applied scientific work. Unlike early users of the term, however, we do not seek to imply that there is any cohesive academic field called ‘Geocomputation’ (or ‘GeoComputation’ as Stan Openshaw called it). Instead, we define the term as follows: working with geographic data in a computational way, focusing on code, reproducibility and modularity.

Geocomputation is a recent term but is influenced by old ideas. It can be seen as a part of Geography, which has a 2000+ year history (Talbert 2014); and an extension of Geographic Information Systems (GIS) (Neteler and Mitasova 2008), which emerged in the 1960s (Coppock and Rhind 1991).

Geography has played an important role in explaining and influencing humanity’s relationship with the natural world long before the invention of the computer, however. Alexander von Humboldt’s travels to South America in the early 1800s illustrate this role: not only did the resulting observations lay the foundations for the traditions of physical and plant geography, they also paved the way towards policies to protect the natural world (Wulf 2015). This book aims to contribute to the ‘Geographic Tradition’ (Livingstone 1992) by harnessing the power of modern computers and open source software.

The book’s links to older disciplines were reflected in suggested titles for the book: Geography with R and R for GIS. Each has advantages. The former conveys the message that it comprises much more than just spatial data: non-spatial attribute data are inevitably interwoven with geometry data, and Geography is about more than where something is on the map. The latter communicates that this is a book about using R as a GIS, to perform spatial operations on geographic data (Bivand, Pebesma, and Gómez-Rubio 2013). However, the term GIS conveys some connotations (see Table 1.1) which simply fail to communicate one of R’s greatest strengths: its console-based ability to seamlessly switch between geographic and non-geographic data processing, modeling and visualization tasks. By contrast, the term geocomputation implies reproducible and creative programming. Of course, (geocomputational) algorithms are powerful tools that can become highly complex. However, all algorithms are composed of smaller parts. By teaching you its foundations and underlying structure, we aim to empower you to create your own innovative solutions to geographic data problems.

1.2 Why use R for geocomputation?

Early geographers used a variety of tools including barometers, compasses and sextants to advance knowledge about the world (Wulf 2015). It was only with the invention of the marine chronometer in 1761 that it became possible to calculate longitude at sea, enabling ships to take more direct routes.

Nowadays such lack of geographic data is hard to imagine. Every smartphone has a global positioning (GPS) receiver, and a multitude of sensors on devices ranging from satellites and semi-autonomous vehicles to citizen scientists incessantly measure every part of the world. The rate of data produced is overwhelming. An autonomous vehicle, for example, can generate 100 GB of data per day (The Economist 2016). Remote sensing data from satellites have become too large to analyze with a single computer, leading to initiatives such as OpenEO.

This ‘geodata revolution’ drives demand for high performance computer hardware and efficient, scalable software to handle and extract signal from the noise, to understand and perhaps change the world. Spatial databases enable storage and generation of manageable subsets from the vast geographic data stores, making interfaces for gaining knowledge from them vital tools for the future. R is one such tool, with advanced analysis, modeling and visualization capabilities. In this context the focus of the book is not on the language itself (see Wickham 2019). Instead we use R as a ‘tool for the trade’ for understanding the world, similar to Humboldt’s use of tools to gain a deep understanding of nature in all its complexity and interconnections (see Wulf 2015). Although programming can seem like a reductionist activity, the aim is to teach geocomputation with R not only for fun, but for understanding the world.

R is a multi-platform, open source language and environment for statistical computing and graphics (r-project.org/). With a wide range of packages, R also supports advanced geospatial statistics, modeling and visualization. New integrated development environments (IDEs) such as RStudio have made R more user-friendly for many, easing map making with a panel dedicated to interactive visualization.

At its core, R is an object-oriented, functional programming language (Wickham 2019), and was specifically designed as an interactive interface to other software (Chambers 2016). The latter also includes many ‘bridges’ to a treasure trove of GIS software, ‘geolibraries’ and functions (see Chapter ??). It is thus ideal for quickly creating ‘geo-tools’, without needing to master lower level languages (compared to R) such as C, FORTRAN or Java (see Section 1.3). This can feel like breaking free from the metaphorical ‘glass ceiling’ imposed by GUI-based or proprietary geographic information systems (see Table 1.1 for a definition of GUI). Furthermore, R facilitates access to other languages: the packages Rcpp and reticulate enable access to C++ and Python code, for example. This means R can be used as a ‘bridge’ to a wide range of geospatial programs (see Section 1.3).

Another example showing R’s flexibility and evolving geographic capabilities is interactive map making. As we’ll see in Chapter ??, the statement that R has “limited interactive [plotting] facilities” (Bivand, Pebesma, and Gómez-Rubio 2013) is no longer true. This is demonstrated by the following code chunk, which creates Figure 1.1 (the functions that generate the plot are covered in Section ??).

```r
library(leaflet)

# Popup labels, in the same order as the marker coordinates below
popup = c("Robin", "Jakub", "Jannes")

leaflet() %>%
  # Night-time basemap tiles provided by NASA
  addProviderTiles("NASAGIBS.ViirsEarthAtNight2012") %>%
  # One marker per author
  addMarkers(lng = c(-3, 23, 11),
             lat = c(52, 53, 49),
             popup = popup)
```

FIGURE 1.1: The blue markers indicate where the authors are from. The basemap is a tiled image of the Earth at night provided by NASA. Interact with the online version at geocompr.robinlovelace.net, for example by zooming in and clicking on the popups.

It would have been difficult to produce Figure 1.1 using R a few years ago, let alone as an interactive map. This illustrates R’s flexibility and how, thanks to developments such as knitr and leaflet, it can be used as an interface to other software, a theme that will recur throughout this book. The use of R code, therefore, enables teaching geocomputation with reference to reproducible examples representing real world phenomena, rather than just abstract concepts.

1.3 Software for geocomputation

R is a powerful language for geocomputation but there are many other options for geographic data analysis providing thousands of geographic functions. Awareness of other languages for geocomputation will help decide when a different tool may be more appropriate for a specific task, and place R in the wider geospatial ecosystem. This section briefly introduces the languages C++, Java and Python for geocomputation, in preparation for Chapter ??.

An important feature of R (and Python) is that it is an interpreted language. This is advantageous because it enables interactive programming in a Read–Eval–Print Loop (REPL): code entered into the console is immediately executed and the result is printed, rather than waiting for the intermediate stage of compilation. On the other hand, compiled languages such as C++ and Java tend to run faster (once they have been compiled).
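The read, evaluate and print stages can be made explicit with base R functions. The following sketch is illustrative only: the expression string is an arbitrary example, and the interactive console performs these steps automatically each time a command is entered.

```r
# Read: turn the text typed at the prompt into an unevaluated expression
typed = "mean(c(52, 53, 49))"  # arbitrary example input
expr = parse(text = typed)[[1]]

# Eval: execute the expression in the current session, with no compile step
result = eval(expr)

# Print: show the value, as the console does after each command
print(result)
```

Because each command is evaluated immediately, intermediate objects such as `result` can be inspected and modified before the next command is run.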

C++ provides the basis for many GIS packages such as QGIS, GRASS and SAGA so it is a sensible starting point. Well-written C++ is very fast, making it a good choice for performance-critical applications such as processing large geographic datasets, but is harder to learn than Python or R. C++ has become more accessible with the Rcpp package, which provides a good ‘way in’ to C programming for R users. Proficiency with such low-level languages opens the possibility of creating new, high-performance ‘geoalgorithms’ and a better understanding of how GIS software works (see Chapter ??).

Java is another important and versatile language for geocomputation. GIS packages gvSig, OpenJump and uDig are all written in Java. There are many GIS libraries written in Java, including GeoTools and JTS, the Java Topology Suite (GEOS is a C++ port of JTS). Furthermore, many map server applications use Java including Geoserver/Geonode, deegree and 52°North WPS.

Java’s object-oriented syntax is similar to that of C++. A major advantage of Java is that it is platform-independent (which is unusual for a compiled language) and is highly scalable, making it a suitable language for IDEs such as RStudio, with which this book was written. Java has fewer tools for statistical modeling and visualization than Python or R, although it can be used for data science (Brzustowicz 2017).

Python is an important language for geocomputation especially because many Desktop GIS such as GRASS, SAGA and QGIS provide a Python API (see Chapter ??). Like R, it is a popular tool for data science. Both languages are object-oriented, and have many areas of overlap, leading to initiatives such as the reticulate package that facilitates access to Python from R and the Ursa Labs initiative to support portable libraries to the benefit of the entire open source data science ecosystem.

In practice both R and Python have their strengths and to some extent which you use is less important than the domain of application and communication of results. Learning either will provide a head-start in learning the other. However, there are major advantages of R over Python for geocomputation. These include its much better support of the geographic data models vector and raster in the language itself (see Chapter 2) and corresponding visualization possibilities (see Chapters 2 and ??). Equally important, R has unparalleled support for statistics, including spatial statistics, with hundreds of packages (unmatched by Python) supporting thousands of statistical methods.

The major advantage of Python is that it is a general-purpose programming language. It is used in many domains, including desktop software, computer games, websites and data science. Python is often the only shared language between different (geocomputation) communities and can be seen as the ‘glue’ that holds many GIS programs together. Many geoalgorithms, including those in QGIS and ArcMap, can be accessed from the Python command line, making it well-suited as a starter language for command-line GIS.

For spatial statistics and predictive modeling, however, R is second-to-none. This does not mean you must choose either R or Python: Python supports most common statistical techniques (though R tends to support new developments in spatial statistics earlier) and many concepts learned from Python can be applied to the R world. Like R, Python also supports geographic data analysis and manipulation with packages such as osgeo, Shapely, NumPy and PyGeoProcessing (Garrard 2016).

1.4 R’s spatial ecosystem

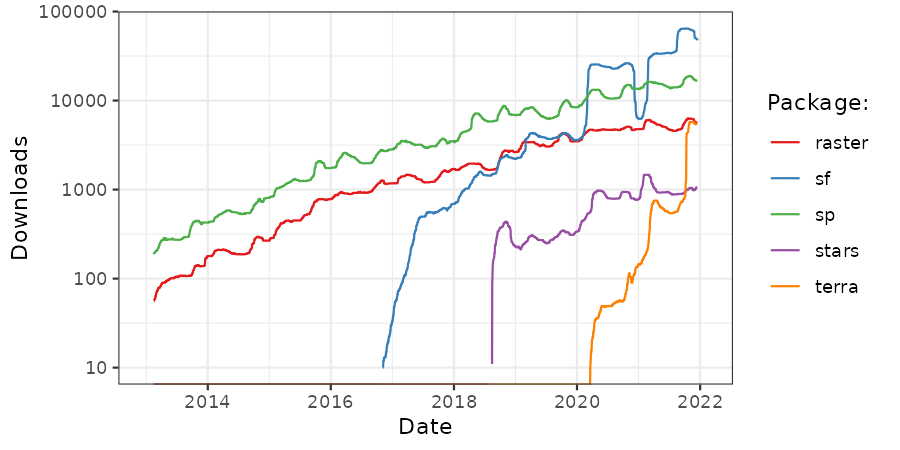

There are many ways to handle geographic data in R, with dozens of packages in the area. In this book we endeavor to teach the state-of-the-art in the field whilst ensuring that the methods are future-proof. Like many areas of software development, R’s spatial ecosystem is rapidly evolving (Figure 1.2). Because R is open source, these developments can easily build on previous work, by ‘standing on the shoulders of giants’, as Isaac Newton put it in 1675. This approach is advantageous because it encourages collaboration and avoids ‘reinventing the wheel’. The package sf (covered in Chapter 2), for example, builds on its predecessor sp.

A surge in development time (and interest) in ‘R-spatial’ has followed the award of a grant by the R Consortium for the development of support for Simple Features, an open-source standard and model to store and access vector geometries. This resulted in the sf package (covered in Section ??). Multiple places reflect the immense interest in sf. This is especially true for the R-sig-Geo Archives, a long-standing open access email list containing much R-spatial wisdom accumulated over the years.

FIGURE 1.2: Downloads of selected R packages for working with geographic data. The y-axis shows average number of downloads per day, within a 91-day rolling window.
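As a minimal sketch of what the Simple Features model looks like in practice, the following code builds three point geometries and attaches an attribute column. It assumes the sf package is installed; the coordinates are the approximate author locations from the code for Figure 1.1, and the object names are illustrative.

```r
library(sf)

# Approximate author locations, reused from the leaflet example
pts = st_sfc(
  st_point(c(-3, 52)),
  st_point(c(23, 53)),
  st_point(c(11, 49)),
  crs = "EPSG:4326"  # geographic CRS: longitude/latitude on WGS84
)

# An sf object is a data frame with a geometry list-column
authors = st_sf(name = c("Robin", "Jakub", "Jannes"), geometry = pts)

# Geometries can be written out as well-known text (WKT)
wkt = st_as_text(st_geometry(authors))

# Attribute operations work as on any data frame
jakub = authors[authors$name == "Jakub", ]
```

Keeping geometries in a column of an ordinary data frame is what allows attribute and spatial operations to be combined in the same workflow.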

It is noteworthy that shifts in the wider R community, as exemplified by the data processing package dplyr (released in 2014), influenced shifts in R’s spatial ecosystem. Alongside other packages that have a shared style and emphasis on ‘tidy data’ (including, e.g., ggplot2), dplyr was placed in the tidyverse ‘metapackage’ in late 2016. The tidyverse approach, with its focus on long-form data and fast, intuitively named functions, has become immensely popular. This has led to a demand for ‘tidy geographic data’ which has been partly met by sf. An obvious feature of the tidyverse is the tendency for packages to work in harmony. There is no equivalent geoverse, but there are attempts at harmonization between packages hosted in the r-spatial organization and a growing number of packages use sf (Table 1.2).

| Package | Downloads |
|---|---|
| spdep | 1413 |
| lwgeom | 1163 |
| stars | 960 |
| leafem | 898 |
| tmap | 841 |

A parallel group of developments relates to the rspatial set of packages. Its main member is the terra package for spatial raster handling (see Section ??).

1.5 The history of R-spatial

There are many benefits of using recent spatial packages such as sf, but it is also important to be aware of the history of R’s spatial capabilities: many functions, use-cases and teaching material are contained in older packages. These can still be useful today, provided you know where to look.

R’s spatial capabilities originated in early spatial packages in the S language (Bivand and Gebhardt 2000). The 1990s saw the development of numerous S scripts and a handful of packages for spatial statistics. R packages arose from these and by 2000 there were R packages for various spatial methods: “point pattern analysis, geostatistics, exploratory spatial data analysis and spatial econometrics”, according to an article presented at GeoComputation 2000 (Bivand and Neteler 2000). Some of these, notably spatial, sgeostat and splancs, are still available on CRAN (B. S. Rowlingson and Diggle 1993; B. Rowlingson and Diggle 2017; Venables and Ripley 2002; Majure and Gebhardt 2016).

A subsequent article in R News (the predecessor of The R Journal) contained an overview of spatial statistical software in R at the time, much of which was based on previous code written for S/S-PLUS (Ripley 2001). This overview described packages for spatial smoothing and interpolation, including akima and geoR (Akima and Gebhardt 2016; Jr and Diggle 2016), and point pattern analysis, including splancs (B. Rowlingson and Diggle 2017) and spatstat (Baddeley, Rubak, and Turner 2015).

The following R News issue (Volume 1/3) put spatial packages in the spotlight again, with a more detailed introduction to splancs and a commentary on future prospects regarding spatial statistics (Bivand 2001). Additionally, the issue introduced two packages for testing spatial autocorrelation that eventually became part of spdep (Bivand 2017). Notably, the commentary mentions the need for standardization of spatial interfaces, efficient mechanisms for exchanging data with GIS, and handling of spatial metadata such as coordinate reference systems (CRS).

maptools (written by Nicholas Lewin-Koh; Bivand and Lewin-Koh (2017)) is another important package from this time. Initially maptools just contained a wrapper around shapelib and permitted the reading of ESRI Shapefiles into geometry nested lists. The corresponding and nowadays obsolete S3 class called “Map” stored this list alongside an attribute data frame. The work on the “Map” class representation was nevertheless important since it directly fed into sp prior to its publication on CRAN.

In 2003 Roger Bivand published an extended review of spatial packages. It proposed a class system to support the “data objects offered by GDAL”, including ‘fundamental’ point, line, polygon, and raster types. Furthermore, it suggested interfaces to external libraries should form the basis of modular R packages (Bivand 2003). To a large extent these ideas were realized in the packages rgdal and sp. These provided a foundation for spatial data analysis with R, as described in Applied Spatial Data Analysis with R (ASDAR) (Bivand, Pebesma, and Gómez-Rubio 2013), first published in 2008. Ten years later, R’s spatial capabilities have evolved substantially but they still build on ideas set out by Bivand (2003): interfaces to GDAL and PROJ, for example, still power R’s high-performance geographic data I/O and CRS transformation capabilities (see Chapters 7 and ??, respectively).

rgdal, released in 2003, provided GDAL bindings for R which greatly enhanced its ability to import data from previously unavailable geographic data formats. The initial release supported only raster drivers but subsequent enhancements provided support for coordinate reference systems (via the PROJ library), reprojections and import of vector file formats (see Chapter ?? for more on file formats). Many of these additional capabilities were developed by Barry Rowlingson and released in the rgdal codebase in 2006 (see B. Rowlingson et al. 2003 and the R-help email list for context).

sp, released in 2005, overcame R’s inability to distinguish spatial and non-spatial objects (Pebesma and Bivand 2005). sp grew from a workshop in Vienna in 2003 and was hosted at sourceforge before migrating to R-Forge. Prior to 2005, geographic coordinates were generally treated like any other number. sp changed this with its classes and generic methods supporting points, lines, polygons and grids, and attribute data.

sp stores information such as bounding box, coordinate reference system and attributes in slots in Spatial objects using the S4 class system, enabling data operations to work on geographic data (see Section ??).

Further, sp provides generic methods such as summary() and plot() for geographic data.
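To make the idea of slots and generic methods concrete, the following sketch defines a toy S4 class in base R. The class name `ToyPoints` and its slots are hypothetical and much simpler than the actual Spatial classes in sp (for instance, the bounding box is computed on demand here rather than stored in a slot). The aim is only to show how spatial metadata can travel with the coordinates, and how a generic such as `summary()` can be given a class-specific method.

```r
library(methods)

# Hypothetical class: coordinates, a CRS string and attribute data held in slots
setClass("ToyPoints", representation(
  coords = "matrix",
  crs = "character",
  data = "data.frame"
))

# Class-specific method for the existing generic summary()
setMethod("summary", "ToyPoints", function(object, ...) {
  # Bounding box derived from the coordinate ranges (rows: x, y)
  bbox = t(apply(object@coords, 2, range))
  dimnames(bbox) = list(c("x", "y"), c("min", "max"))
  list(n = nrow(object@coords), bbox = bbox, crs = object@crs)
})

pts = new("ToyPoints",
  coords = cbind(x = c(-3, 23, 11), y = c(52, 53, 49)),
  crs = "EPSG:4326",
  data = data.frame(name = c("Robin", "Jakub", "Jannes"))
)

pts_summary = summary(pts)  # dispatches to the ToyPoints method
pts_summary$bbox
pts@crs                     # slots are accessed with @
```

Because the coordinates are no longer bare numbers, functions can check the class of their input and treat geographic data differently from ordinary vectors.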

In the following decade, sp classes rapidly became popular for geographic data in R and the number of packages that depended on it increased from around 20 in 2008 to over 100 in 2013 (Bivand, Pebesma, and Gómez-Rubio 2013).

As of 2018 almost 500 packages rely on sp, making it an important part of the R ecosystem.

Prominent R packages using sp include: gstat, for spatial and spatio-temporal geostatistics; geosphere, for spherical trigonometry; and adehabitat used for the analysis of habitat selection by animals (Pebesma and Graeler 2018; Calenge 2006; Hijmans 2016).

While rgdal and sp solved many spatial issues, R still lacked the ability to do geometric operations (see Chapter ??).

Colin Rundel addressed this issue by developing rgeos, an R interface to the open-source geometry library (GEOS) during a Google Summer of Code project in 2010 (Bivand and Rundel 2018).

rgeos enabled GEOS to manipulate sp objects, with functions such as gIntersection().

Another limitation of sp — its restricted support for raster data — was overcome by raster, first released in 2010 (Hijmans 2017). Its class system and functions support a range of raster operations as outlined in Section ??. A key feature of raster is its ability to work with datasets that are too large to fit into RAM (R’s interface to PostGIS supports off-disk operations on vector geographic data). raster also supports map algebra (see Section ??).
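Map algebra treats rasters as grids of cell values to which arithmetic can be applied. As a rough sketch that avoids any package dependency, the example below uses plain R matrices as stand-ins for two raster layers. This is not the raster API, and the layer names and values are made up for illustration.

```r
# Two small made-up 'layers' on the same 3 x 3 grid (e.g., two reflectance bands)
red = matrix(c(0.1, 0.2, 0.3,
               0.2, 0.2, 0.4,
               0.3, 0.1, 0.2), nrow = 3, byrow = TRUE)
nir = matrix(c(0.5, 0.6, 0.3,
               0.4, 0.6, 0.4,
               0.9, 0.5, 0.6), nrow = 3, byrow = TRUE)

# Local operation: cell-by-cell arithmetic combining both layers
# (a normalized difference index)
ndi = (nir - red) / (nir + red)

# Local operation with a condition: flag cells above a threshold
high = ndi > 0.4

# Global operation: a single summary value for the whole layer
ndi_mean = mean(ndi)
```

Dedicated raster packages apply the same kind of cell-wise logic while also keeping track of extent, resolution and coordinate reference system.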

In parallel with these developments of class systems and methods came the support for R as an interface to dedicated GIS software. GRASS (Bivand 2000) and follow-on packages spgrass6 and rgrass7 (for GRASS GIS 6 and 7, respectively) were prominent examples in this direction (Bivand 2016a, 2016b). Other examples of bridges between R and GIS include RSAGA (Brenning, Bangs, and Becker 2018, first published in 2008), RPyGeo (Brenning 2012, first published in 2008), RQGIS (Muenchow, Schratz, and Brenning 2017, first published in 2016), and rqgisprocess (see Chapter ??).

Visualization was not a focus initially, with the bulk of R-spatial development focused on analysis and geographic operations.

sp provided methods for map making using both the base and lattice plotting system but demand was growing for advanced map making capabilities.

RgoogleMaps, first released in 2009, made it possible to overlay R spatial data on top of ‘basemap’ tiles from online services such as Google Maps or OpenStreetMap (Loecher and Ropkins 2015).

It was followed by the ggmap package that added similar ‘basemap’ tiles capabilities to ggplot2 (Kahle and Wickham 2013).

Though ggmap facilitated map-making with ggplot2, its utility was limited by the need to fortify spatial objects, which means converting them into long data frames.

While this works well for points it is computationally inefficient for lines and polygons, since each coordinate (vertex) is converted into a row, leading to huge data frames to represent complex geometries.
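The cost of this conversion can be seen in a small base R sketch. The helper `to_long()` below is hypothetical and is not the `fortify()` implementation from ggplot2; it simply shows how polygons stored as coordinate matrices become one row per vertex.

```r
# Two made-up polygons, each a matrix of vertices
# (the first vertex is repeated at the end to close the ring)
polys = list(
  a = cbind(x = c(0, 1, 1, 0, 0), y = c(0, 0, 1, 1, 0)),
  b = cbind(x = c(2, 3, 2.5, 2), y = c(0, 0, 1, 0))
)

# Hypothetical helper: one data frame row per vertex, with id and drawing order
to_long = function(polys) {
  rows = lapply(names(polys), function(id) {
    m = polys[[id]]
    data.frame(id = id, order = seq_len(nrow(m)), x = m[, "x"], y = m[, "y"])
  })
  do.call(rbind, rows)
}

long = to_long(polys)
nrow(long)  # 9 rows for only 2 polygons: 5 + 4 vertices
```

With real boundaries containing thousands of vertices per polygon, the number of rows grows accordingly, and any attribute attached to a polygon has to be repeated on every one of its rows.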

Although geographic visualization tended to focus on vector data, raster visualization is supported in raster and received a boost with the release of rasterVis, which is described in a book on the subject of spatial and temporal data visualization (Lamigueiro 2018).

As of 2018 map making in R is a hot topic with dedicated packages such as tmap, leaflet and mapview all supporting the class system provided by sf, the focus of the next chapter (see Chapter ?? for more on visualization).

Since 2018, the modernization of core R packages for handling spatial data has continued. terra, a successor of the raster package that aims for better performance and a more straightforward user interface, was first released in 2020 (Hijmans 2021; see Chapter ??). In mid-2021, a significant change was made to the sf package by incorporating spherical geometry calculations. Since this change, by default, many spatial operations on data with geographic CRSs apply the C++ s2geometry library’s spherical geometry algorithms, while the same types of operations on data with projected CRSs still use GEOS. New ideas about spatial data representations were also developed in this period. These include the stars package, closely connected to sf, for handling raster and vector data cubes (Pebesma 2021) and lidR for processing airborne LiDAR (Light Detection and Ranging) point clouds (Roussel et al. 2020).

This modernization had several causes, including the emergence of new technologies and standards, and the impact of spatial software development outside of the R environment (Bivand 2021).

The most important external factor affecting most spatial software, including R spatial packages, was a series of major updates to the PROJ library that began in 2018 and included many breaking changes.

Most importantly, these changes forced the replacement of the proj4string representation with WKT for storing coordinate reference systems and coordinate operations (learn more in Section 2.4 and Chapter 7).
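Why the distinction between spherical and planar calculations matters for data with geographic CRSs can be sketched in base R. The code below is not the s2 or GEOS algorithm: it compares a haversine great-circle distance, which assumes a perfectly spherical Earth with a mean radius of 6371 km, with a naive Euclidean distance computed directly on longitude/latitude degrees. The function name and coordinates are illustrative.

```r
# Haversine great-circle distance in km (spherical Earth assumption)
haversine_km = function(lon1, lat1, lon2, lat2, radius = 6371) {
  to_rad = pi / 180
  dlat = (lat2 - lat1) * to_rad
  dlon = (lon2 - lon1) * to_rad
  a = sin(dlat / 2)^2 +
    cos(lat1 * to_rad) * cos(lat2 * to_rad) * sin(dlon / 2)^2
  2 * radius * asin(sqrt(a))
}

# One degree of longitude at the equator and at 60 degrees north
d_equator = haversine_km(0, 0, 1, 0)
d_north = haversine_km(0, 60, 1, 60)

# Treating degrees as planar units gives 1 'unit' in both cases
planar = sqrt((1 - 0)^2 + (0 - 0)^2)

c(equator = d_equator, north = d_north, planar_degrees = planar)
```

The same one-degree step covers roughly half the ground distance at 60 degrees north as it does at the equator, which a planar calculation on unprojected coordinates cannot capture.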

Since 2018, the progress of spatial visualization tools in R has been related to a few factors.

Firstly, new types of spatial plots were developed, including the rayshader package offering a combination of raytracing and multiple hill-shading methods to produce 2D and 3D data visualizations (Morgan-Wall 2021).

Secondly, ggplot2 gained new spatial capabilities, mostly thanks to the ggspatial package that adds some spatial visualization elements, including scale bars and north arrows (Dunnington 2021) and gganimate that enables smooth and customizable spatial animations (Pedersen and Robinson 2020).

Thirdly, the performance of visualizing large spatial datasets was improved.

This especially relates to automatic plotting of downscaled rasters in tmap and the possibility of using high-performance interactive rendering platforms in the mapview package, such as "leafgl" and "mapdeck".

Lastly, some of the existing mapping tools have been rewritten to minimize dependencies, improve user interface, or allow for easier creation of extensions.

This includes the mapsf package (successor of cartography) (Giraud 2021) and version 4 of the tmap package, in which most of the internal code was revised.

In late 2021, the planned retirement of rgdal, rgeos and maptools at the end of 2023 was announced on the R-sig-Geo mailing list by Roger Bivand. This will have a large impact on existing workflows that use these packages, and will also affect the packages that depend on rgdal, rgeos or maptools. Bivand’s suggestion is therefore to plan a transition to more modern tools, including sf and terra, as explained in this book’s next chapters.

1.6 Exercises

E1. Think about the terms ‘GIS’, ‘GDS’ and ‘geocomputation’ described above. Which (if any) best describes the work you would like to do using geo* methods and software and why?

E2. Provide three reasons for using a scriptable language such as R for geocomputation instead of using a graphical user interface (GUI) based GIS such as QGIS.

E3. Think about a real world problem you would like to solve with geographic data that could help people living in your local area and sketch a map of the geographic processes involved.

E4. Consider the datasets needed to represent the problem computationally and sketch a workflow for processing them, resulting in outputs that could help inform decision making related to the problem you thought of in the previous exercise. Use a pen and paper or a digital sketching tool such as Excalidraw.